A Large-Scale Force-Feedback Robotic Interface for Non-Deterministic Scenarios in VR

CoVR is a physical column mounted on a 2D Cartesian ceiling robot that provides strong kinesthetic feedback (over 100 N) in a room-scale VR arena.

The column's panels are interchangeable, and the column can safely reach any location in the VR arena thanks to XY displacements and to trajectory generation that avoids collisions with the user.

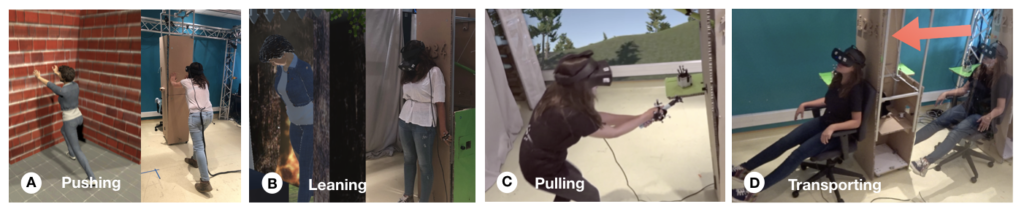

When CoVR is static, it can resist body-scale user actions, such as (A) users pushing hard on a static, tangible rigid wall or (B) leaning on it.

When CoVR is dynamic, it can act on users: (C) CoVR can pull users to provide large force feedback or even (D) transport them.

Full details on CoVR and the interactions it enables will be available soon. In the meantime, the technical aspects of CoVR and its control are described in the following paragraphs.

A UnityPackage to replicate our controllers and models is available below.

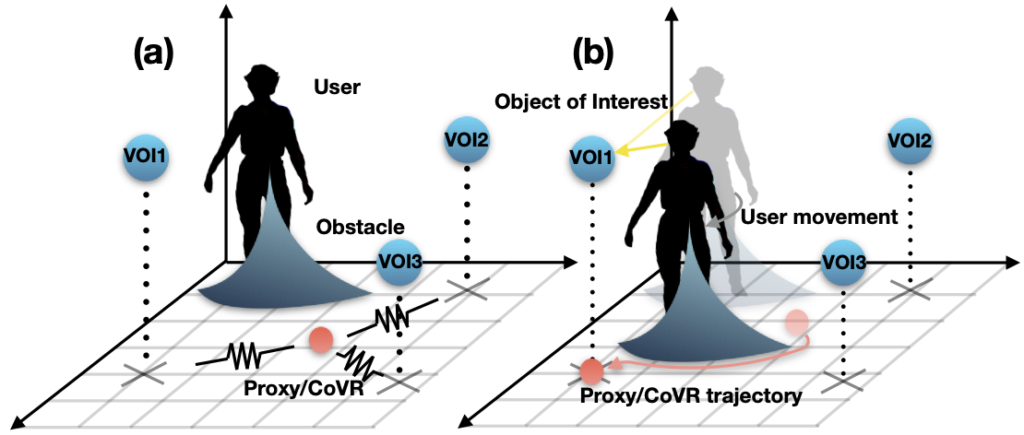

In the paper, we present a model to control the robot's displacements. While the Cartesian structure makes trajectories easy to generate (XY displacements), the algorithm's inputs must still be defined for scenarios involving multiple objects of interest, and safety measures around the user must be implemented.
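One simple form such a safety measure could take is sketched below, assuming a rectangular arena and a circular keep-out zone around the user's tracked XY position. The names `Arena`, `safe_target` and the default `safety_radius` of 0.8 m are hypothetical and are not taken from the CoVR package: the desired column position is pushed out of the user's safety disc and then clamped to the robot's reachable workspace.

```python
import math
from dataclasses import dataclass


@dataclass
class Arena:
    # Hypothetical rectangular XY workspace of the ceiling robot, in metres
    x_min: float
    x_max: float
    y_min: float
    y_max: float


def safe_target(target, user, arena, safety_radius=0.8):
    """Push a desired column position out of the user's safety disc, then clamp it to the arena."""
    tx, ty = target
    ux, uy = user
    dx, dy = tx - ux, ty - uy
    dist = math.hypot(dx, dy)
    if dist < safety_radius:
        if dist == 0.0:
            # Degenerate case: no direction available, so push along +x arbitrarily
            dx, dy, dist = 1.0, 0.0, 1.0
        # Rescale the user-to-target vector so it lies on the safety circle
        scale = safety_radius / dist
        tx, ty = ux + dx * scale, uy + dy * scale
    # Clamp to the reachable workspace of the Cartesian robot
    tx = min(max(tx, arena.x_min), arena.x_max)
    ty = min(max(ty, arena.y_min), arena.y_max)
    return tx, ty


arena = Arena(0.0, 5.0, 0.0, 5.0)
print(safe_target((2.3, 2.0), (2.0, 2.0), arena))    # pushed to about (2.8, 2.0)
print(safe_target((10.0, 10.0), (2.0, 2.0), arena))  # clamped to (5.0, 5.0)
```

Note that clamping after the projection can pull the target back inside the safety disc when the user stands close to an arena wall; a real controller would need an explicit policy for that case.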

The main idea is to attach the robot to a virtual proxy (a ball with mass and gravity) through a spring-damper model.
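The coupling can be illustrated with a minimal 2D spring-damper sketch. It assumes the robot's XY carriage is simulated as a point mass pulled towards the proxy; the gains `k` and `c`, the time step, and the names `Body2D` and `spring_damper_step` are hypothetical choices for illustration, not the values or code used in CoVR.

```python
from dataclasses import dataclass


@dataclass
class Body2D:
    # Point mass moving in the horizontal XY plane (gravity only keeps it on the floor)
    x: float
    y: float
    vx: float = 0.0
    vy: float = 0.0
    mass: float = 1.0


def spring_damper_step(robot, proxy, k=40.0, c=12.0, dt=0.01):
    """Advance the robot by one step, pulled towards the proxy (semi-implicit Euler)."""
    # Spring force proportional to the offset; damper force opposes the relative velocity
    fx = k * (proxy.x - robot.x) - c * (robot.vx - proxy.vx)
    fy = k * (proxy.y - robot.y) - c * (robot.vy - proxy.vy)
    # Update velocity first, then position with the new velocity
    robot.vx += fx / robot.mass * dt
    robot.vy += fy / robot.mass * dt
    robot.x += robot.vx * dt
    robot.y += robot.vy * dt
    return robot


robot = Body2D(0.0, 0.0)
proxy = Body2D(1.0, 0.0)
for _ in range(1000):  # 10 s of simulated time
    spring_damper_step(robot, proxy)
print(round(robot.x, 3), robot.y)
```

With these gains the damping ratio is about 0.95, so the robot settles on the proxy with almost no overshoot. Filtering the proxy's motion through a spring-damper in this way smooths abrupt proxy jumps before they reach the physical robot.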

The ball's displacements depend on (1) the user's location, to avoid collisions, (2) the user's intentions, and (3) the progress of the scenario, which together attract the ball towards the objects users are most likely to interact with next. A key contribution of our trajectory generation model is a low-computation user intention model that works with common HMDs.
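How these three inputs might combine into a single force on the proxy is sketched below. This is an illustration under stated assumptions, not the paper's model: each object of interest gets a hypothetical non-negative weight standing in for intention and scenario progress, the proxy is attracted to each object in proportion to its normalised weight, a repulsive force acts only inside a hypothetical `safety_radius` around the user, and the ball's rolling resistance is approximated as viscous friction. All names and gains (`proxy_force`, `k_attract`, `k_repulse`, `friction`) are hypothetical.

```python
import math


def proxy_force(proxy_pos, proxy_vel, user_pos, objects, weights,
                k_attract=5.0, k_repulse=8.0, safety_radius=1.0, friction=2.0):
    """Net XY force on the virtual proxy ball.

    objects: (x, y) positions of the objects of interest
    weights: hypothetical non-negative scores (intention and scenario progress), one per object
    """
    px, py = proxy_pos
    fx = fy = 0.0
    total = sum(weights)
    # (2) + (3): attraction towards each object, scaled by its normalised weight
    if total > 0:
        for (ox, oy), w in zip(objects, weights):
            fx += k_attract * (w / total) * (ox - px)
            fy += k_attract * (w / total) * (oy - py)
    # (1): repulsion from the user, growing linearly as the ball enters the safety radius
    dx, dy = px - user_pos[0], py - user_pos[1]
    d = math.hypot(dx, dy)
    if 0.0 < d < safety_radius:
        push = k_repulse * (safety_radius - d) / d  # magnitude k_repulse * (R - d)
        fx += push * dx
        fy += push * dy
    # Viscous friction so the ball settles instead of oscillating
    fx -= friction * proxy_vel[0]
    fy -= friction * proxy_vel[1]
    return fx, fy


# The user is far away, and only the first object is currently relevant
print(proxy_force((0.0, 0.0), (0.0, 0.0), (10.0, 10.0), [(1.0, 0.0), (0.0, 1.0)], [1.0, 0.0]))
```

Integrating this force over time (for example with `force / mass * dt`, as for any point mass) moves the ball, and the robot then follows the ball through the spring-damper coupling.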

The 3D scenes from our technical evaluation (Data Collection and Simulation) are available for download as a UnityPackage containing:

- 3D scenes and models (user obstacle, proxy, scripts);

- data from our 6 participants;

- all the simulation files;

- the attached Jupyter Notebook to analyse and compare the intention parameters.

Other intention models can be implemented by modifying the equation in the `Object_Of_Interest` script.
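As an example of the kind of lightweight equation that could be swapped in, the sketch below scores an object from the HMD pose alone. It is a hypothetical model, not the equation shipped in `Object_Of_Interest`: it assumes the head's XY position and horizontal forward vector are available, rewards objects in front of the user via the cosine of the angle between head direction and object direction, and discounts distant objects. The exponents `alpha` and `beta` are hypothetical tuning parameters.

```python
import math


def intention_score(head_pos, head_forward, obj_pos, alpha=2.0, beta=1.0):
    """Hypothetical HMD-only intention score: higher when the user faces a nearby object."""
    dx, dy = obj_pos[0] - head_pos[0], obj_pos[1] - head_pos[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return 1.0  # the user is standing on the object: treat as maximal interest
    fnorm = math.hypot(head_forward[0], head_forward[1])
    if fnorm == 0.0:
        raise ValueError("head_forward must be a non-zero XY vector")
    # Cosine of the angle between the head direction and the direction to the object
    cos_theta = (dx * head_forward[0] + dy * head_forward[1]) / (dist * fnorm)
    # Objects behind the user get zero; nearer objects in view get higher scores
    return max(cos_theta, 0.0) ** alpha / (1.0 + dist) ** beta


def normalise(scores):
    """Turn raw scores into weights that sum to 1 (all zeros if no object is in view)."""
    total = sum(scores)
    return [s / total for s in scores] if total > 0 else [0.0] * len(scores)


head, forward = (0.0, 0.0), (1.0, 0.0)
objects = [(1.0, 0.0), (3.0, 0.0), (0.0, 1.0)]
print(normalise([intention_score(head, forward, o) for o in objects]))
```

Only a dot product, a square root and a few divisions are needed per object and frame, which keeps this family of models cheap enough to run alongside the scene. The normalised scores could then serve as per-object weights for attracting the proxy.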